
33 & 34. Recovering a Faulty Disk

https://www.udemy.com/course/a-complete-guide-on-linux-lvm/learn/lecture/13062490#overview

- Overview

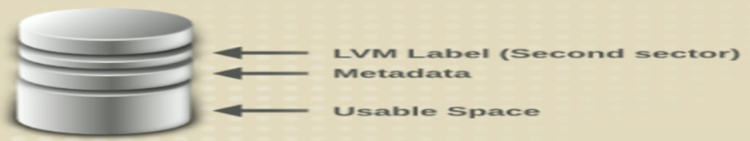

- It is possible for the volume group metadata area of a physical volume to be overwritten or deleted.

- When that happens, LVM prints an error saying that the metadata area is incorrect or that it cannot find a physical volume with a particular UUID.

- Symptoms

- Running `lvs -a -o +devices` reports errors such as:

- Couldn’t find device with uuid ‘gobblety-gook….’

- Couldn’t find all physical volumes for volume group VG

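The UUID named in these messages is the one you later hand to `pvcreate --uuid`, so it helps to capture it reliably. A minimal sketch, assuming bash plus `grep -E`/`grep -o`; `missing_pv_uuids` is a hypothetical helper name, and the two message patterns are the ones shown in these notes (wording varies between LVM2 versions, so adjust them if yours differ). It matches the standard 32-character LVM UUID layout (6-4-4-4-4-4-6).

```bash
#!/usr/bin/env bash
# missing_pv_uuids: hypothetical helper (not part of LVM).
# Reads LVM command output on stdin, keeps only the "missing PV" messages,
# and prints each LVM-style UUID found in them, one per line, de-duplicated.
missing_pv_uuids() {
  grep -E "find device with uuid|Device for PV .* not found" |
    grep -oE '[A-Za-z0-9]{6}(-[A-Za-z0-9]{4}){5}-[A-Za-z0-9]{6}' |
    sort -u
}
```

Usage: `lvs -a -o +devices 2>&1 | missing_pv_uuids`. The `2>&1` matters because LVM writes its warnings to stderr.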

34 Lab

https://www.udemy.com/course/a-complete-guide-on-linux-lvm/learn/lecture/13062660#overview

```
# View the number of PVs in a VG
vgs
  VG #PV #LV #SN ...
  VG   3   1   0 ...

# Display information about the PVs and their PEs
vgdisplay -v /dev/VG
...
  --- Physical Volumes ---
  PV Name               /dev/sdg1
  PV UUID               AOSIDq-alE5-...
  PV Status             allocatable
  Total PE / Free PE    511 / 255

  PV Name               /dev/sdh1
  PV UUID               B1SmDq-blEy-...
  PV Status             allocatable
  Total PE / Free PE    511 / 255

  PV Name               /dev/sdi1
  PV UUID               C2SIkq-clEp-...
  PV Status             allocatable
  Total PE / Free PE    511 / 255
```
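Before anything breaks, it is worth recording which UUID belongs to which partition, since that pairing is exactly what a rebuild needs. A small sketch, assuming bash and awk; `pv_uuid_table` is a hypothetical name, and it relies only on the `PV Name` / `PV UUID` lines shown above (names with spaces, such as `unknown device`, are kept whole). `pvs -o pv_name,pv_uuid` gives the same pairing directly if you prefer not to parse `vgdisplay`.

```bash
#!/usr/bin/env bash
# pv_uuid_table: hypothetical helper (not part of LVM).
# Reads `vgdisplay -v` output on stdin and prints "<PV Name> <PV UUID>"
# for every physical volume listed. LV Name / LV UUID lines are ignored.
pv_uuid_table() {
  awk '
    $1 == "PV" && $2 == "Name" {
      name = $3
      for (i = 4; i <= NF; i++) name = name " " $i   # keep multi-word names
    }
    $1 == "PV" && $2 == "UUID" { print name, $3 }
  '
}
```

Usage: `vgdisplay -v /dev/VG 2>/dev/null | pv_uuid_table > ~/VG-pv-uuids.txt` (the output path is just an example).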

Deliberately corrupt a drive's metadata

```
# Overwrite the first 1 KiB of /dev/sdg1, which holds its LVM label
dd if=/dev/zero of=/dev/sdg1 bs=1024 count=1

# Look at the info again
vgdisplay -v /dev/VG
...
  --- Physical Volumes ---
  PV Name               unknown device
  PV UUID               AOSIDq-alE5-...
  PV Status             allocatable
  Total PE / Free PE    511 / 255

lvs -a -o +devices
  WARNING: Device for PV AOSIDq-alE5-... not found or rejected by a filter
  ...
  data LV -wi-a---p- 1.00g unknown device(0)
```
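The `p` in the attribute string `-wi-a---p-` is what flags the LV as partial: it is the 9th character of `lv_attr` (volume health), meaning one or more of the LV's PVs is missing. A sketch for spotting such LVs, assuming bash and awk; `partial_lvs` is a hypothetical helper, and it expects the three columns requested in the usage line below.

```bash
#!/usr/bin/env bash
# partial_lvs: hypothetical helper (not part of LVM).
# Reads the output of
#   lvs --noheadings -o lv_name,vg_name,lv_attr
# on stdin and prints "VG/LV" for each LV whose 9th lv_attr character is "p".
partial_lvs() {
  awk 'substr($3, 9, 1) == "p" { print $2 "/" $1 }'
}
```

Usage: `lvs --noheadings -o lv_name,vg_name,lv_attr 2>/dev/null | partial_lvs`.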

How to correct

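```
# If the disk itself has failed, install a replacement first
# (in this lab the original /dev/sdg1 is reused, since only its metadata was wiped)

# Deactivate the volume group; --partial allows this even though a PV is missing
# (unmount any filesystems on the VG before this step)
vgchange -an --partial

# Write a new PV label on the disk that reuses the old UUID, taking the
# PV layout from the VG's metadata backup (this does not copy any data)
pvcreate --uuid "AOSIDq-alE5-..." --restorefile /etc/lvm/backup/VG /dev/sdg1

# Restore the rest of the VG metadata from the backup
vgcfgrestore /dev/VG

# Reactivate the logical volumes in the VG
vgchange -a y /dev/VG

# Verify everything is working
vgdisplay -v /dev/VG
...
  --- Physical Volumes ---
  PV Name               /dev/sdg1
  PV UUID               AOSIDq-alE5-...
  PV Status             allocatable
  Total PE / Free PE    511 / 255
```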
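The same steps can be wrapped in a function so the VG name, UUID, and device are typed only once. This is a sketch, not a tested recovery tool: `restore_pv_metadata` and the `DRY_RUN` variable are hypothetical, it assumes the default backup file `/etc/lvm/backup/<VG>` as in the lab, and, unlike the lab command, it passes the VG name to `vgchange -an --partial` so only that VG is deactivated. It defaults to printing the commands; run it as root with `DRY_RUN=0` to execute them.

```bash
#!/usr/bin/env bash
# restore_pv_metadata: hypothetical wrapper around the manual steps above.
# Arguments: VG name, UUID of the missing PV, replacement device.
# DRY_RUN=1 (the default) only echoes the commands; DRY_RUN=0 runs them.
restore_pv_metadata() {
  local vg=$1 uuid=$2 dev=$3
  local backup="/etc/lvm/backup/$vg"
  local run=""
  [ "${DRY_RUN:-1}" = "1" ] && run="echo"

  # Only insist on the backup file when we are really going to use it
  if [ "$run" != "echo" ] && [ ! -r "$backup" ]; then
    echo "no readable backup file: $backup" >&2
    return 1
  fi

  # Stop at the first failing step
  $run vgchange -an --partial "$vg" &&
    $run pvcreate --uuid "$uuid" --restorefile "$backup" "$dev" &&
    $run vgcfgrestore "$vg" &&
    $run vgchange -ay "$vg"
}
```

Usage: `restore_pv_metadata VG "AOSIDq-alE5-..." /dev/sdg1` prints the four commands for review; substitute the full UUID (for example from the error message or the backup file) before running it for real.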

Using the LVM backup file

```
# Become root and change to the LVM backup directory
sudo su
cd /etc/lvm/backup

# Show your volume groups
vgs
  VG   #PV #LV #SN Attr   VSize   VFree
  myVG   1   1   0 wz--n- 196.96g    0

# View the backup file for that VG (each file is named after its VG)
cat myVG
```

Actual backup file contents from my lab:

```
# Generated by LVM2 version 2.02.180(2)-RHEL7 (2018-07-20): Mon Apr 6 17:42:57 2020

contents = "Text Format Volume Group"
version = 1

description = "Created *after* executing 'lvcreate -n cc138e33-c171-6d3f-e559-f9649d47ad75 -L 201688 XSLocalEXT-cc138e33-c171-6d3f-e559-f9649d47ad75'"

creation_host = "citrix01" # Linux citrix01 4.19.0+1 #1 SMP Wed Dec 4 13:59:08 UTC 2019 x86_64
creation_time = 1586212977 # Mon Apr 6 17:42:57 2020

XSLocalEXT-cc138e33-c171-6d3f-e559-f9649d47ad75 {
    id = "PQfRRm-HJvu-2L3r-ohPX-tWaj-azeD-mwIaxj"
    seqno = 2
    format = "lvm2" # informational
    status = ["RESIZEABLE", "READ", "WRITE"]
    flags = []
    extent_size = 8192 # 4 Megabytes
    max_lv = 0
    max_pv = 0
    metadata_copies = 0

    physical_volumes {

        pv0 {
            id = "14dfcf-uhNt-wav7-d1jH-hdOH-fGCr-nABNQi"
            device = "/dev/sda3" # Hint only

            status = ["ALLOCATABLE"]
            flags = []
            dev_size = 413084303 # 196.974 Gigabytes
            pe_start = 22528
            pe_count = 50422 # 196.961 Gigabytes
        }
    }

    logical_volumes {

        cc138e33-c171-6d3f-e559-f9649d47ad75 {
            id = "PpOE7h-qUkF-grNR-2zxi-GMZC-S9HQ-mKhJY6"
            status = ["READ", "WRITE", "VISIBLE"]
            flags = []
            creation_time = 1586212977 # 2020-04-06 17:42:57 -0500
            creation_host = "citrix01"
            segment_count = 1

            segment1 {
                start_extent = 0
                extent_count = 50422 # 196.961 Gigabytes

                type = "striped"
                stripe_count = 1 # linear

                stripes = [
                    "pv0", 0
                ]
            }
        }
    }
}
```
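The `pv0` block is where the UUID for `pvcreate --uuid` comes from, and the sizes are counted in 512-byte sectors: `extent_size = 8192` is 8192 × 512 bytes = 4 MiB, so `pe_count = 50422` is 50422 × 4 MiB = 201688 MiB, the same figure passed to `lvcreate -L` in the `description` line. A sketch that pulls those values out of a backup file, assuming bash and awk and the layout shown above; `backup_pvs` is a hypothetical helper, not an LVM tool.

```bash
#!/usr/bin/env bash
# backup_pvs: hypothetical helper (not part of LVM).
# Reads an LVM text-format backup file on stdin and prints, per PV:
#   <pv key> <UUID> <device hint> <size in MiB>
# Size = pe_count * extent_size (sectors) * 512 bytes / 1 MiB.
backup_pvs() {
  awk '
    /^[[:space:]]*extent_size[[:space:]]*=/ { split($0, a, "="); ext = a[2] + 0 }
    /^[[:space:]]*physical_volumes[[:space:]]*[{]/ { in_pvs = 1; next }
    /^[[:space:]]*logical_volumes[[:space:]]*[{]/  { in_pvs = 0; next }
    in_pvs && /^[[:space:]]*pv[0-9]+[[:space:]]*[{]/ { key = $1; next }
    in_pvs && /^[[:space:]]*id[[:space:]]*=/     { id = $3;  gsub(/"/, "", id) }
    in_pvs && /^[[:space:]]*device[[:space:]]*=/ { dev = $3; gsub(/"/, "", dev) }
    in_pvs && /^[[:space:]]*pe_count[[:space:]]*=/ {
      split($0, a, "="); n = a[2] + 0
      print key, id, dev, n * ext * 512 / 1048576
    }
  '
}
```

Usage: `backup_pvs < /etc/lvm/backup/myVG`. For the file above this should print `pv0 14dfcf-uhNt-wav7-d1jH-hdOH-fGCr-nABNQi /dev/sda3 201688`. The `device` value is only a hint; LVM identifies PVs by UUID, not by device name.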